We find ourselves in the fifth industrial revolution. In this article Mark Knell looks at the move from this current age of digitalization to the next socio-technological transformation, one anchored in nanotechnology, biotechnology, quantum computing, and artificial intelligence.

Mark Knell, Research Professor, NIFU

The Space Race was a defining moment in my childhood. While I was not concerned about the competition between Cold War rivals at the time, I eagerly awaited every spaceflight up to Apollo 11 and beyond. I did not realize what technical achievements these milestones required, nor did I recognize that a technological revolution was about to happen.

The digitalization of society

Over time, I experienced a succession of small evolutionary changes, which led to the all-inclusive digitalization of the economy and society. The digital revolution happened with a big bang, but it was the transformative character of the techno-economic paradigm that led to the development of personal computers, digital control instruments, software, and application of integrated circuits in a wide variety of innovative products and services.

But the digital revolution was bubbling underneath the golden age of the automobile and mass production. As a child growing up near Detroit in the 1960s, I admired the automobile and all the consumer trappings that came with it. I also remember going to the Henry Ford, a wonderful museum that exemplifies the fourth industrial revolution and includes Edison’s laboratory.

The discovery of the vacuum tube as an on-off switch in 1935 anticipated the digital revolution. Later research by Alan Turing, Claude Shannon, Howard Aiken, and John von Neumann led to the invention of the transistor in December 1947, when scientists demonstrated the first point-contact transistor amplifier.

Over the next two decades, Bell Labs developed several types of transistors, including the silicon transistor and the MOS transistor. A laboratory-invention phase that included many prototypes, patents, and early applications marked the beginning of an emerging revolution, which began at Bell Labs but soon took root in a vibrant electronics cluster in the Santa Clara (Silicon) Valley.

Intel announced the first commercially practical microprocessor, the 4004, in November 1971, the same year the US Department of Defense installed the first computers on ARPANET (which later morphed into the Internet).

This microprocessor made it possible to incorporate all the functions of a central processing unit (CPU) onto a single integrated circuit. The Apollo Guidance Computer was the first silicon integrated circuit-based computer and anticipated the microprocessor. Then news about the Apollo 11 mission completely drowned out the announcement by Intel. [1]

Computing as a general-purpose technology

It did not take long for the microprocessor to become a general-purpose technology. [2] Soon afterwards several new technological trajectories appeared within the digital techno-economic paradigm, which evolved into clusters of new and dynamic technologies, products, and industries that rippled through the entire economy and society.

New enterprises emerged and interacted with each other in complex networks, which pursued problem-solving activities that were cumulative, incremental, and path-dependent. [3] This led to the development of a global digital telecommunications network and the internet, together with electronic mail and other e-services. [4]

The digital revolution was truly transformative. Apple, Alphabet (Google), Cisco Systems, Microsoft, and Facebook became large corporations early in the digital revolution. Universal availability of the microprocessor, rapid growth in computing power and later extensive use in a wide variety of products and services both accelerated and enabled the growth of networked enterprises.

Moore’s Law predicted that the number of transistors in an integrated circuit would double about every two years. Today the Apple iPad Air uses a 5 nm processor with 11.8 billion transistors. The original Intel microprocessor had only 2,250 transistors.
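The arithmetic behind that comparison can be checked with a short calculation. The sketch below uses the two transistor counts quoted above; the end year of 2020 is an assumption made here for illustration (the text only says "today"), and the function names are hypothetical.

```python
import math

# Figures quoted in the text.
TRANSISTORS_1971 = 2_250       # original Intel microprocessor
TRANSISTORS_TODAY = 11.8e9     # 5 nm processor in the iPad Air

# Assumption (not stated in the text): "today" is taken to be 2020.
YEAR_START = 1971
YEAR_END = 2020


def doublings(start_count: float, end_count: float) -> float:
    """Number of times start_count must double to reach end_count."""
    return math.log2(end_count / start_count)


def implied_doubling_period(start_count: float, end_count: float, years: float) -> float:
    """Average number of years per doubling over the given span."""
    return years / doublings(start_count, end_count)


n = doublings(TRANSISTORS_1971, TRANSISTORS_TODAY)
period = implied_doubling_period(
    TRANSISTORS_1971, TRANSISTORS_TODAY, YEAR_END - YEAR_START
)
print(f"{n:.1f} doublings, one every {period:.1f} years")
```

Under these assumptions the two chips are separated by roughly 22 doublings, or one about every 2.2 years, which is close to the two-year pace described by Moore’s Law.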

The second half of the digital revolution

We are now in the second half of the digital revolution. The financial collapse of 2008 marked the turning point, as power shifted away from financiers to entrepreneurs and enterprises, and currently we should be in the period of high economic growth, or the golden age. [5]

Here diffusion is key, as is the complete digitalization of everyday objects and activities, or what we might refer to as ubiquitous computing. A wide array of new products dependent on microprocessors have appeared in recent decades, including robotics, artificial intelligence (AI) and smart energy networks.

Robotics and AI became key technologies in the second half of the digital revolution. [6] Modern robots can be autonomous or semi-autonomous, appearing human-like at times, but most often they are just complex industrial machines with little guiding intelligence.

These include industrial robots, warehouse robots, agricultural robots, autonomous vehicles, caring robots, medical robots, and robots in education. A few robots have AI, but it is narrow and limited by an inability to apply common sense to everyday problems.

The next revolution

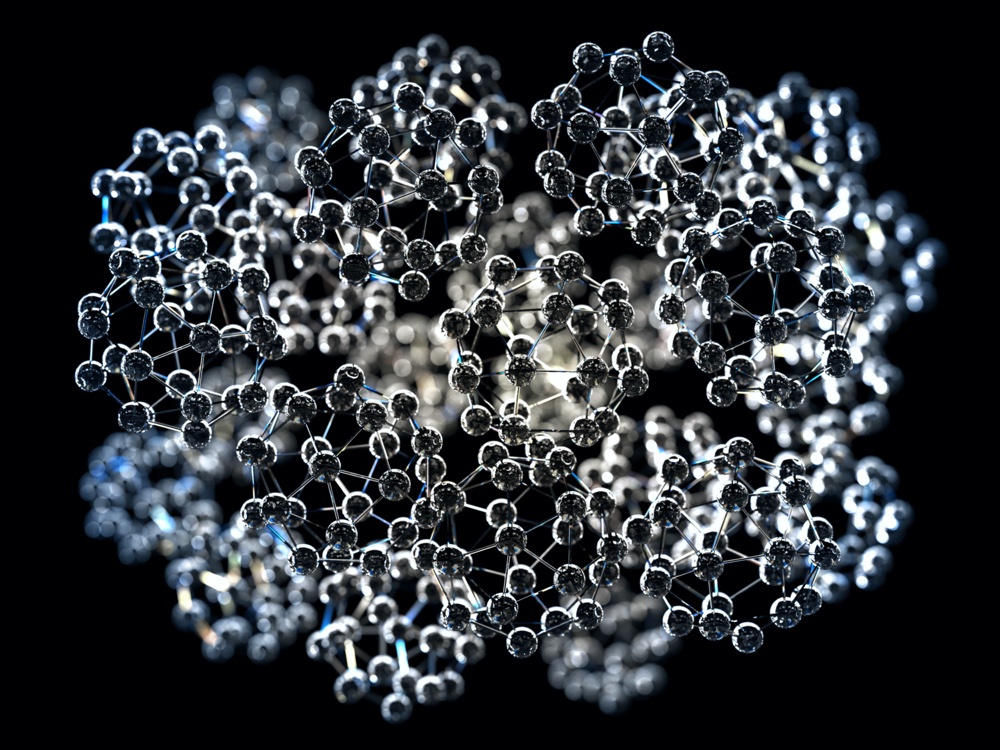

Can we envisage the future and the sixth industrial revolution? Possible emerging technologies include nanotechnology, biotechnology, quantum computing, and AI. This is where the physical, digital, and biological worlds could converge. [7]

Inspiration for the idea originates in Richard Feynman’s 1959 lecture, “There’s Plenty of Room at the Bottom”. Here Feynman described a process in which scientists would be able to manipulate and control individual atoms and DNA molecules. [8]

The idea of the transistor and the microprocessor started the process of miniaturization. Most notably, Feynman had anticipated nanoscience and nanotechnology, which became the study and application of extremely small things and can be used across all the other science fields, such as chemistry, biology, physics, materials science, and engineering.

Quantum mechanics is an essential catalyst in the development of quantum nanoscience and quantum biology, and it will be a platform for studying quantum technology and quantum computing in the next technological revolution.

Advances in super-resolution microscopy, molecular (nano) machines, and cryo-electron microscopy were recognized with Nobel Prizes between 2014 and 2017. The highest-resolution microscope can resolve features down to 0.39 ångströms, which is smaller than the diameter of a single atom.

It is possible that two or three independent technological systems could converge into one system, which would then trigger the explosive take-off into the sixth technological revolution.

Atoms, DNA, bits, and synapses will supply the basic elements and foundational tools that will make it possible to integrate several emerging technologies, including nanotechnology, biotechnology, information technology, and the latest cognitive technologies, into multifunctional systems. [9]

Anticipating a future involves changing the techno-economic paradigm. Are we ready for the age of the quantum? Will modern microscopy lead the way? Will we see a convergence across a range of disciplines in anticipation of the sixth industrial revolution?

Will the manipulation of DNA molecules and the rearrangement of atoms reorganise our societies, our values, the economy, and the environment as well? Can we imagine scenarios that capture both opportunities and threats, negative as well as positive effects?

These kinds of questions are essential for developing futures literacy. The anticipatory systems view makes it possible to integrate the future into the present by formulating diverse ways of, and several reasons for, thinking about the future. [10] Traditional foresight models do not predict novelty, disruption, complexity, or a shift in paradigms. This requires a new framework for connecting the theories and practices of ‘using-the-future’ and appreciating complexity.

REFERENCES

[1] Isaacson, W. 2014. The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution, Simon and Schuster.

[2] Bresnahan (2010) describes a general-purpose technology (GPT) as having three characteristics: pervasiveness, technological dynamism, and dynamic complementarities. Possible emerging GPTs in the sixth industrial revolution include nanotechnology, biotechnology, quantum computing, and AI.

[3] Nelson, R.R. and Winter, S.G. 1982. An Evolutionary Theory of Economic Change, Harvard. Dosi, G. 1982. Technological paradigms and technological trajectories. Research Policy 11:147-162.

[4] Freeman, C., and F. Louçã 2001. As Time Goes By. From the Industrial Revolution to the Information Revolution, Oxford: Oxford University Press. Perez, C. 2002. Technological revolutions and finance capital: The dynamics of bubbles and golden ages. Cheltenham: Edward Elgar.

[5] Perez, C. 2013. Unleashing a golden age after the financial collapse: drawing lessons from history. Environmental Innovation and Societal Transitions 6, 9–23.

[6] Hudson, J. 2019, The Robot Revolution, Edward Elgar. Mitchell, M. 2019, Artificial Intelligence: A Guide for Thinking Humans. Pelican Books.

[7] Some researchers believe that AI is already a GPT. This idea goes back to Alan Turing in 1950 and early software applications. Current AI architectures, however, have limited cognitive capabilities that depend on classical machine learning. Future AI may depend on the intersection of quantum physics and machine learning. See Agrawal, A. K., Gans, J., and Goldfarb, A. (eds.), 2019. The Economics of Artificial Intelligence: An Agenda, University of Chicago.

[8] Feynman’s lecture, “There’s Plenty of Room at the Bottom,” was originally published in the February 1960 issue of Caltech’s Engineering and Science Magazine. An updated discussion of the lecture is found in Daukantas, P. 2019, “Still plenty of room at the bottom”, Optics and Photonics News, July/August.

[9] Roco, M.C., and W.S. Bainbridge. 2003. Converging Technologies for Improving Human Performance: Nanotechnology, Biotechnology, Information Technology, and Cognitive Science. Springer. Over time nanotechnology may improve productivity, reduce costs, enable new infrastructures, and encourage the creation of new products and processes using nanomaterials. See also Knell, M. 2010. Nanotechnology and the Sixth Technological Revolution. In: Cozzens S., Wetmore J. (eds) Nanotechnology and the Challenges of Equity, Equality and Development. Springer.

[10] Miller, R. 2018. Transforming the Future: Anticipation in the 21st Century, Routledge.

Top photo: A nanoparticle is usually defined as a particle of matter that is between 1 and 100 nanometres (nm) in diameter. A nanometre is a thousand-millionth of a metre. Photo NiPlot.